How might organizations become more effective in converting analytics into management actions?

Being data-enabled is not about having dashboards displayed on big screens. Dashboards are only stepping stones toward asking richer questions, undertaking deeper analysis and discovering sharper insights.

How might an organization’s consumer or operational data be put to the best use by its decision-makers?

This requires collaboration between analysts and decision-makers:

- The key task for analysts is to turn a finding into an insight: make it actionable by validating a course of action or recommending a change.

  - Collaborate with the business: You don’t have to do this alone. In fact, it is crucial that you don’t. A key step in transforming a finding into an insight is understanding the domain (the industry whose data you’re analyzing). Business stakeholders can help you sense-check your findings and translate them into the most actionable message for decision-makers. These stakeholders can be anyone who understands the subject. For example, in a software company, they could be developers, designers, product managers or other leads.

  - Build your domain knowledge: You can always ask stakeholders, but ultimately it is you who has to cross the chasm from numbers to meaning. Level up your subject understanding by asking for recommendations of reliable material (docs, books, videos, etc.) so that you can continuously build bridges from information to knowledge¹.

-

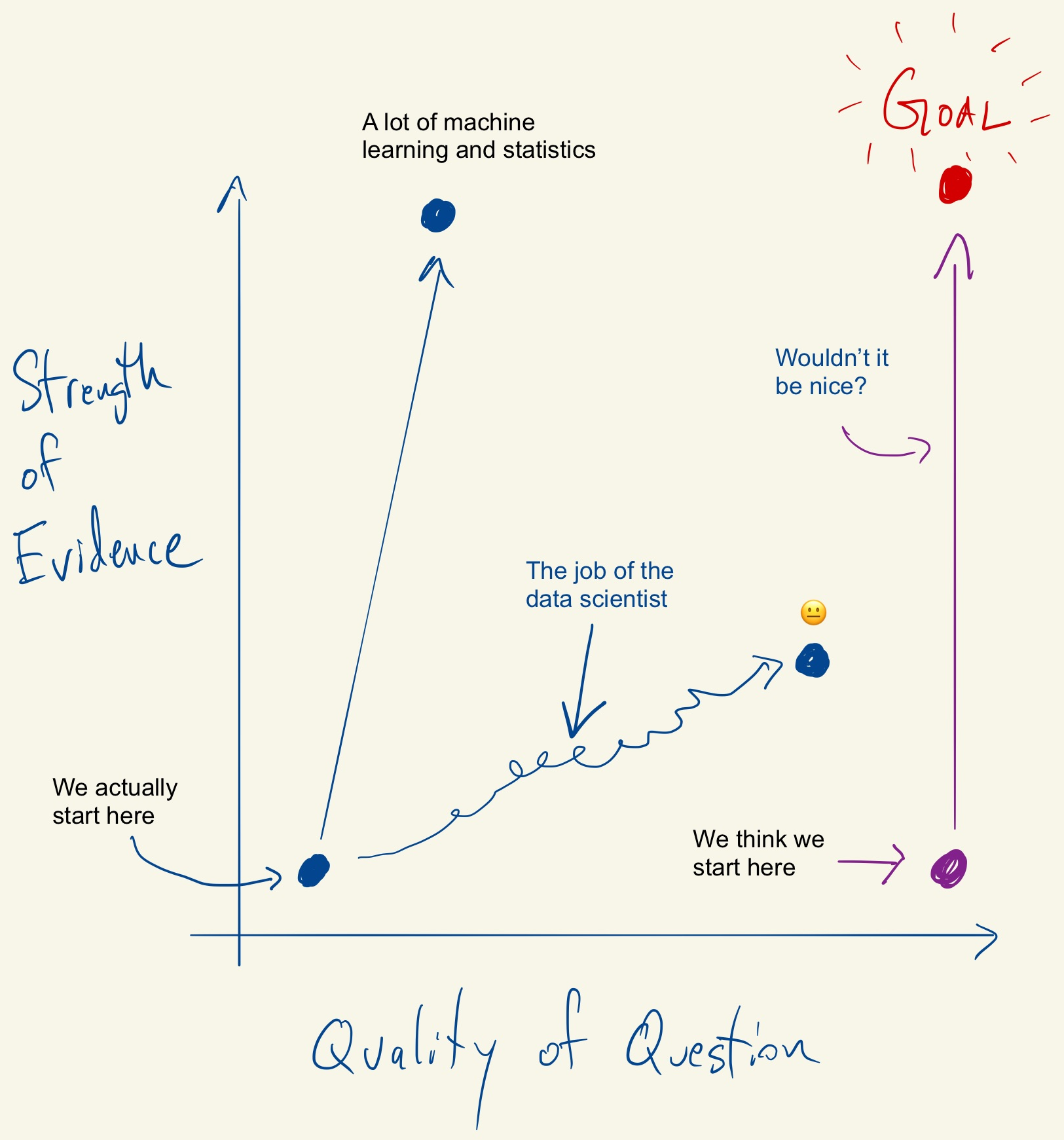

Help the business improve the quality of their question. More on this below.

By Roger Peng


- The key task for decision-makers is to build a hinge for action. What do you do once you have the analytics solution? What kind of action do you take?

  - Collaborate with your resident data science/analytics team to improve the quality of your question: Dig into the WHY before the project begins. What would you do if you had the analytics solution/answer today? How would you use it? This ensures that the analysis is firmly hinged on action.

  - Disclose your expectations: Get comfortable with sharing your estimate. When we ask a question, we usually have an estimate that we aren’t yet certain about. Pencil in this expected result; better yet, share it with your team.

    It’s okay if it turns out to be wrong. What is not okay is losing the opportunity to learn from it. Our estimates are based on existing knowledge, information we have consumed from different media and perhaps some first- or second-hand research. When the findings match the estimate, they validate our methods. When they differ, they prompt us to learn why: perhaps we missed a piece of the puzzle, or perhaps there were too many variables. After all, the objective isn’t to achieve accuracy (that’s for our data teams).

    The objective is to peg your estimate to two courses of action: what you expect to do if your estimate is correct, and what you expect to do if it isn’t.

    Rough is fine. We don’t need a strategic plan yet, only a guide that propels us into clear action.

    For example, when investigating the app language your users have set, let’s say you expect it to be English. If most users instead default to a non-English language, you may choose to investigate the cost-benefit of offering a language-switch option upfront, before users dive into your app.

  - Set targets/thresholds: This is another way to ensure action. It borrows from the practice of estimating: here, you explicitly estimate a future outcome and peg actions to two scenarios. If you achieve the target, you may choose to raise it next time or keep it the same; if you don’t, you begin investigating what needs to change. Like estimating, target setting only gets better the more we do it.

- Back to analysts: once you have the business’s expected results, be aware of anchoring bias so that you are not swayed by them. Hypothesis testing prepares us for this. Remember your training.

How might organizations become more effective in converting analytics into management actions?

It all comes down to a process shift; think of it as a culture shift that encourages estimating and learning from failure, because there is no innovation without risk-taking.


This article is inspired by Gartner’s question: ‘Decisions: Can Data & Analytics make them better?’


¹ The Data-Information-Knowledge-Wisdom (DIKW) Pyramid is explained by DataCamp in its free data literacy course.